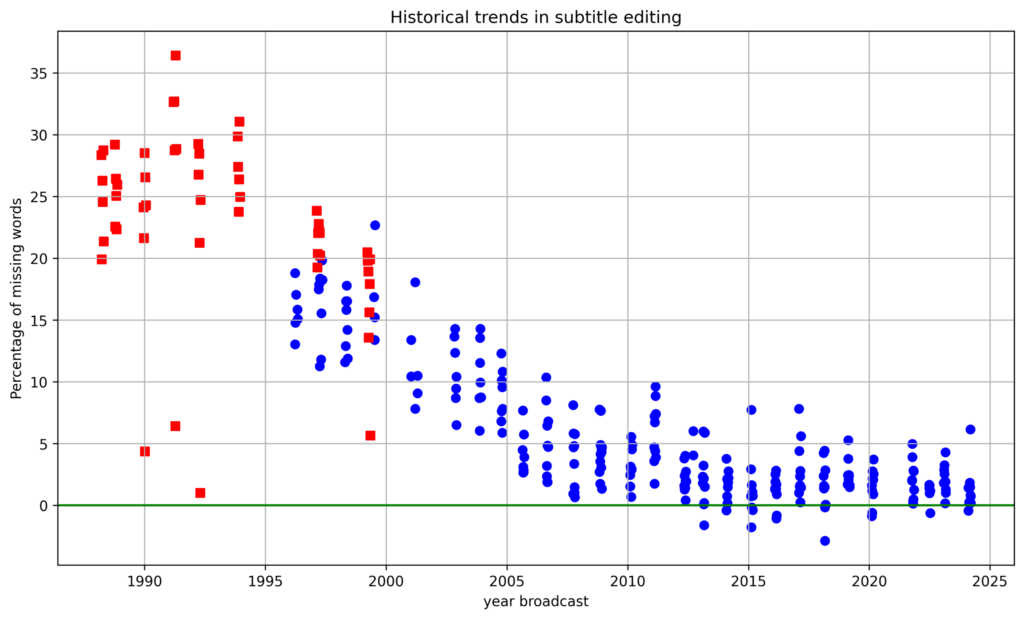

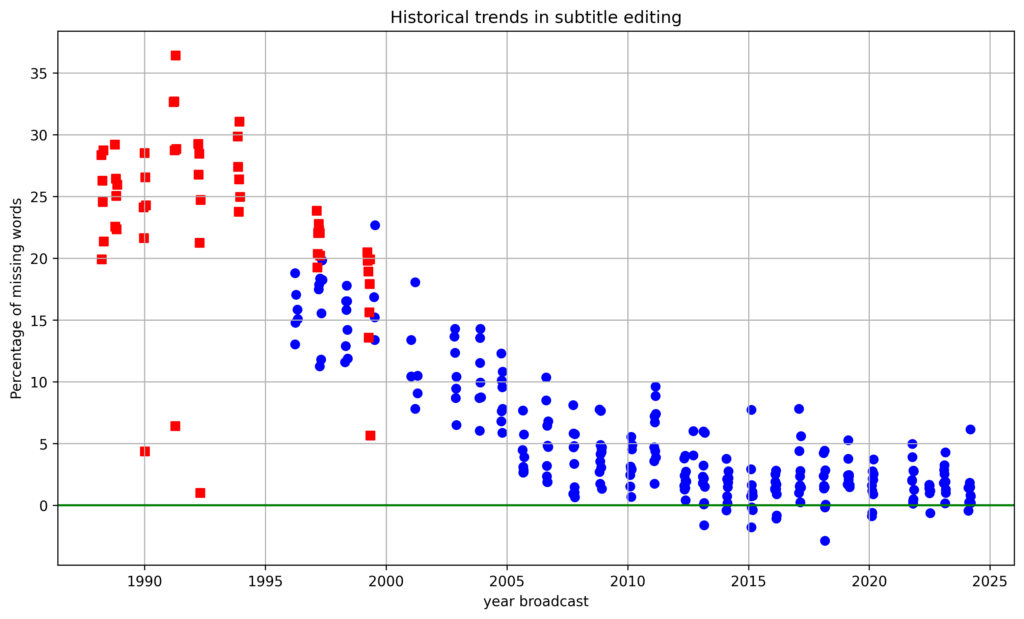

One of the problems with plotting subtitle data over a 24 hour period is working out which programmes are causing problems. The answer is to add EPG data to the plots, which means working out how to get the text onto them in a readable form.

The result isn’t particularly elegant: each plot is different, so the text can overlap with the plotted data. In the end I changed the colour of the plot and overlaid black text to make it readable. This mostly works, except for programmes lasting 10 minutes or less, where the text still overlaps.
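One possible way to reduce the overlap for short programmes is to stagger their labels across two rows. The following is only a sketch of that idea, not the method used for the plots above: `Programme`, `label_positions` and the 15 minute threshold are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Programme:
    title: str
    start_min: float  # minutes since midnight (assumed unit)
    end_min: float


def label_positions(epg, min_label_width=15.0):
    """Return (title, x_centre, row) for each programme.

    Each label is centred on its programme. Programmes of
    min_label_width minutes or longer go on row 0. Shorter programmes
    take the row opposite to the previous label, so that runs of
    short programmes alternate between row 0 and row 1.
    """
    placed = []
    prev_row = 1  # so that a short first programme lands on row 0
    for p in sorted(epg, key=lambda p: p.start_min):
        centre = (p.start_min + p.end_min) / 2
        is_short = (p.end_min - p.start_min) < min_label_width
        row = 1 - prev_row if is_short else 0
        placed.append((p.title, centre, row))
        prev_row = row
    return placed


# Made-up schedule, purely to show the output shape.
epg = [
    Programme("News", 0, 60),
    Programme("Weather", 60, 65),
    Programme("Trailer", 65, 70),
    Programme("Film", 70, 190),
]
positions = label_positions(epg)
```

The row value can then be mapped to a vertical offset when the text is drawn, whatever plotting library is in use.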

The above plot is a record of subtitle delay on BBC One for the 7th January 2025. It shows approximately zero delay until 01:30, when BBC One joins BBC News and the characteristic 4 second delay of the BBC News ASR trial is seen. Then at 06:00 the delay becomes larger and more variable, because Breakfast is subtitled by humans using a mixture of autocue and scripts along with respeaking. The delay then drops slightly for Morning Live and goes back to zero for the prerecorded programmes. You can also see the delay go back up during the live News bulletins at 13:00, 18:00 and 22:00, The One Show at 19:00 and Match of the Day running up to midnight.

Plotting the average word rates over 5 minute segments for the subtitles and transcript reveals some interesting contrasts in the plot above. While the subtitles roughly keep track with the speech most of the time, three programmes stand out as causing problems: Match of the Day, Breakfast and, most noticeably, Morning Live, where the speech rate far exceeds the subtitle word rate.
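As a rough illustration of the 5 minute averaging, the sketch below counts words per fixed-length segment and converts the count to words per minute. It assumes each word (from either the subtitles or the transcript) carries a timestamp in seconds since midnight; the function name and data layout are assumptions, not the code behind the plots.

```python
from collections import Counter


def word_rate_per_segment(word_times_s, segment_s=300):
    """Average words per minute for each fixed-length segment.

    word_times_s: one timestamp per word, in seconds since midnight.
    Returns {segment_start_seconds: words_per_minute}. Segments that
    contain no words are absent from the result rather than zero.
    """
    counts = Counter(int(t // segment_s) * segment_s for t in word_times_s)
    minutes = segment_s / 60
    return {start: n / minutes for start, n in sorted(counts.items())}


# Synthetic data: 1000 spoken words and 800 subtitle words spread
# over the first 5 minutes of the day.
speech = [i % 300 for i in range(1000)]
subtitles = [i % 300 for i in range(800)]
speech_wpm = word_rate_per_segment(speech)
subtitle_wpm = word_rate_per_segment(subtitles)
```

Running the same function over both word streams gives two series keyed by segment start time, which can be plotted against each other.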

Because the programme boundaries are now available, it is also possible to plot the average word rates for each programme, as shown above. In this plot you don’t see the very high peak speech rates in sections of Morning Live, but you can clearly see that while the average speech rate is around 200 wpm, the subtitles only manage about 160 wpm. This means that in total around 20% of the words spoken are missing from the subtitles, and in some segments of the programme the figure is much higher.
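The per-programme average and the 20% figure follow from simple arithmetic, sketched below. The function names are hypothetical, and the missing fraction assumes that every subtitle word corresponds to a spoken word, which will not be exactly true when subtitlers paraphrase.

```python
def programme_word_rate(word_times_s, start_s, end_s):
    """Average words per minute between a programme's EPG start and end."""
    n = sum(1 for t in word_times_s if start_s <= t < end_s)
    return n / ((end_s - start_s) / 60)


def missing_fraction(speech_wpm, subtitle_wpm):
    """Fraction of spoken words with no counterpart in the subtitles,
    assuming every subtitle word matches a spoken word."""
    return 1 - subtitle_wpm / speech_wpm


# Figures quoted in the text for Morning Live: 200 wpm spoken, 160 wpm subtitled.
missing = missing_fraction(200, 160)
print(f"{missing:.0%} of spoken words missing")
```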

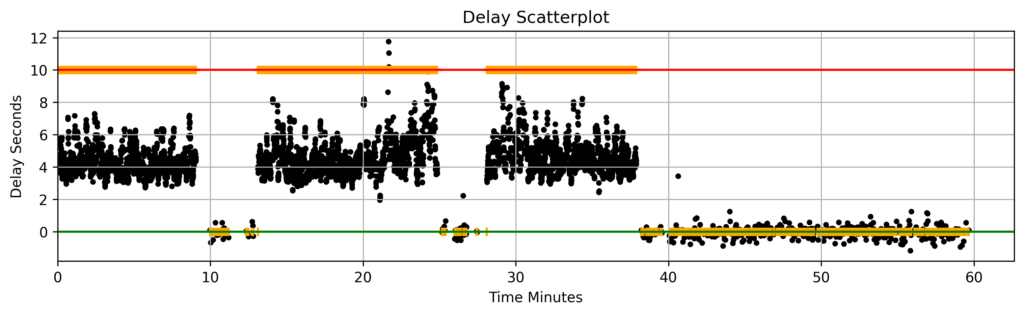

The delay plot for ITV1 on the 19th January shows some similar patterns and some key differences. There are no subtitles between midnight and 03:00 during Shop on TV, and Unwind with ITV from around 03:50 to 05:00 contains no speech. The programmes at 03:00 and 05:00 are prerecorded, and the delay spikes are likely to be anomalies caused by the commercial breaks. Good Morning Britain goes live at 06:00 and the delay is around 6 seconds, dropping to zero when adverts are subtitled. There is also a large delay peak of over 30 seconds just after 09:00.

Looking at the scatter plot of delay for 09:00 to 10:00, above, it would appear this peak was caused by a technical fault, because the delay rises almost linearly before dropping back. This points to some form of buffering or rate limiting downstream of the subtitler.
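One way to put a number on "almost linearly" is to fit a straight line to the delay samples over the rising part of the peak. The sketch below uses an ordinary least-squares fit; the function name and the sample values are made up for illustration and are not measurements from the plot.

```python
def fit_line(times_s, delays_s):
    """Ordinary least-squares fit of delay = slope * time + intercept.

    Returns (slope, intercept). The slope is in seconds of extra delay
    per second of elapsed time.
    """
    n = len(times_s)
    mean_t = sum(times_s) / n
    mean_d = sum(delays_s) / n
    sxx = sum((t - mean_t) ** 2 for t in times_s)
    sxy = sum((t - mean_t) * (d - mean_d) for t, d in zip(times_s, delays_s))
    slope = sxy / sxx
    return slope, mean_d - slope * mean_t


# Synthetic ramp: delay grows from 6 s to 24 s over three minutes.
times = [0, 60, 120, 180]
delays = [6, 12, 18, 24]
slope, intercept = fit_line(times, delays)
```

A steady positive slope with small residuals would be consistent with a backlog building up at a constant rate, whereas human subtitling delay would be expected to scatter around a roughly constant value.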

ITV1 live programming continues with Lorraine at 09:30, This Morning at 10:00 and then Loose Women at 12:30, where the subtitle delay averages around 12 seconds for around 15 minutes from 13:00 onwards. This might be down to the delay in the programme feed reaching one of the subtitlers working on the programme, or some other technical issue. The delay drops back below 10 seconds for the lunchtime news before prerecorded programmes take over at 14:00. At 18:00 the subtitle delay jumps to a very steady 5 seconds for the regional news. This is consistent with the use of ASR generated subtitles for all their regional news bulletins. The delay is more variable for the national news that follows and again for the news at 22:00.

The word rate plots for commercial channels have to be interpreted with caution, because most TV adverts do not contain subtitles. This reduces the average subtitle word rate below that of the programme itself and makes the programme averages less useful. However, from the 5 minute word rate averages, it is clear that the subtitles fall behind the speech most noticeably during This Morning.

The similarity with BBC One’s Morning Live is striking. These are similar programmes and clearly both present a very difficult challenge for subtitlers. They are both factual and aimed at an audience that is quite likely to rely on subtitles. These programmes and others like them should be the focus for research into improving subtitle quality, because any techniques that improve the subtitles for this material would also benefit many other less challenging live programmes. This is the kind of problem that might benefit from programme specific machine learning and helper material like scripts, alongside the use of cutting edge speech to text. However, because this is a good example of a messy, hard problem, I suspect it is one many academics will avoid because of the high risk of failure.

So the 24 hour plots are made far more informative by the addition of the EPG data. The EPG data helps highlight which types of programming present the biggest challenges for subtitling and is starting to indicate where future research should focus.